How do FPGAs solve the two major pain points of real-time AI?

Compared with other existing FPGA cloud platforms, the main distinguishing feature of the Catapult platform is that it builds a global FPGA resource pool from which FPGA hardware resources can be flexibly allocated and used. This pooling of FPGAs gives Catapult a major advantage over other solutions.

In the Catapult platform, FPGAs have become "first-class citizens" and are no longer entirely subject to CPU management. On the Catapult accelerator card, the FPGA is connected directly to the data center network's top-of-rack (TOR) switch, with no forwarding through the CPU or network card. This lets FPGAs in the same data center, or even in different data centers, interconnect and communicate directly over the high-speed network, and together they form an FPGA resource pool. Management software can partition the pooled FPGA resources directly, effectively decoupling the FPGA from the CPU.
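To make the pooling idea concrete, here is a minimal Python sketch of a global pool in which FPGAs are handed to services by network identity rather than through a host CPU. The `FPGA` and `FPGAPool` classes, their fields, and the first-fit allocation policy are hypothetical illustrations, not Catapult's actual management software or API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FPGA:
    fpga_id: str
    datacenter: str
    owner: Optional[str] = None  # service holding this FPGA; None means free


@dataclass
class FPGAPool:
    """Hypothetical global pool. Each FPGA is addressed over the network,
    so allocation never goes through the CPU of the host it is plugged into."""
    devices: list[FPGA] = field(default_factory=list)

    def allocate(self, service: str, count: int) -> list[str]:
        """First-fit: hand the first `count` free FPGAs to `service`."""
        free = [d for d in self.devices if d.owner is None]
        if len(free) < count:
            raise RuntimeError(f"{count} FPGAs requested, only {len(free)} free")
        chosen = free[:count]
        for d in chosen:
            d.owner = service
        return [d.fpga_id for d in chosen]

    def release(self, service: str) -> None:
        """Return every FPGA held by `service` to the pool."""
        for d in self.devices:
            if d.owner == service:
                d.owner = None
```

Because each device is identified only by its ID and data center, a single allocation can span FPGAs in several data centers, which mirrors the decoupling from the host CPU described above.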

First, FPGA pooling breaks down the boundary between the CPU and the FPGA. In the traditional usage model, the FPGA serves as a hardware acceleration unit that offloads and accelerates software functions originally implemented on the CPU. It is therefore tightly coupled to the CPU and heavily dependent on CPU management, while the division of roles between the two remains rigid.

Second, FPGA pooling breaks down the resource boundary of a single FPGA. Logically, Catapult's pooled data center FPGA architecture is equivalent to adding a layer of parallel FPGA computing resources on top of the traditional CPU-based computing layer, and this layer can independently accelerate the computation of multiple services and applications.

For artificial intelligence applications, especially those based on deep learning, many scenarios, such as search and speech recognition, have strict real-time requirements. Microsoft in particular has many rich-text AI scenarios, such as web search, speech-to-text conversion, translation, and question answering. These rich-text applications and models place more stringent demands on memory bandwidth than traditional CNNs do.
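One common way to see why such models stress memory bandwidth more than CNNs is arithmetic intensity: useful FLOPs per byte of weights fetched. The sketch below assumes, as a simplification, that a rich-text model is dominated by matrix-vector products served one request at a time (as in RNN/LSTM or fully connected layers at batch size 1). It counts only weight traffic and assumes 2-byte (FP16) weights; the function names and layer sizes are hypothetical.

```python
def matvec_intensity(rows: int, cols: int, bytes_per_weight: int = 2) -> float:
    """FLOPs per byte of weight traffic for y = W @ x at batch size 1.
    Every weight is fetched once and used in exactly one multiply-add."""
    flops = 2 * rows * cols  # one multiply + one add per weight
    weight_bytes = rows * cols * bytes_per_weight
    return flops / weight_bytes


def conv_intensity(k: int, c_in: int, c_out: int, h_out: int, w_out: int,
                   bytes_per_weight: int = 2) -> float:
    """FLOPs per byte of weight traffic for a k x k convolution.
    Each weight is reused at every one of the h_out * w_out output positions."""
    weights = k * k * c_in * c_out
    flops = 2 * weights * h_out * w_out
    return flops / (weights * bytes_per_weight)
```

For a 1024 x 1024 fully connected layer, `matvec_intensity(1024, 1024)` gives 1.0 FLOP per byte, while a 3 x 3 convolution with 64 input and output channels over a 56 x 56 output gives `conv_intensity(3, 64, 64, 56, 56)` = 3136.0. The convolution reuses each weight thousands of times, so the matrix-vector layer needs far more memory bandwidth per unit of compute.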

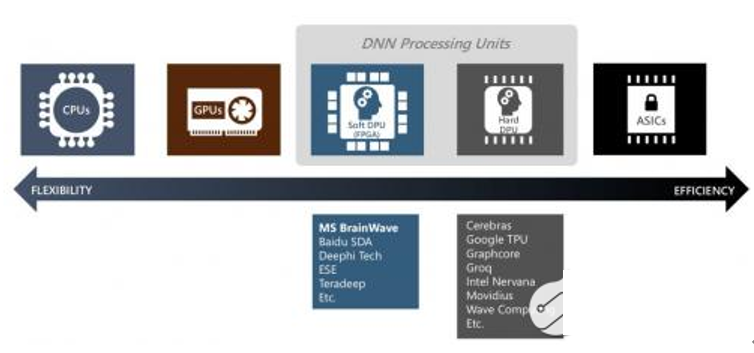

Faced with both the "low latency" and "high bandwidth" requirements, the traditional approach for deep learning models and hardware is to prune and compress the neural network, shrinking the model until it fits within the limited hardware resources of an NPU. The main problem with this approach, however, is an inevitable loss of model accuracy and quality, and these losses are often irreparable.
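Pruning comes in many forms, and the article does not say which one it means. As one illustrative example, here is a minimal sketch of magnitude pruning, which keeps only as many of the largest-magnitude weights as a hypothetical hardware budget allows and zeroes the rest. The function names and the L1-mass measure of discarded weights are assumptions chosen for illustration.

```python
def magnitude_prune(weights: list[float], budget: int) -> list[float]:
    """Keep the `budget` largest-magnitude weights and zero the rest."""
    budget = max(budget, 0)
    if budget >= len(weights):
        return list(weights)
    # Indices ordered by |w|, largest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(order[:budget])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


def dropped_fraction(original: list[float], pruned: list[float]) -> float:
    """Fraction of the original L1 weight mass removed by pruning."""
    total = sum(abs(w) for w in original)
    kept = sum(abs(w) for w in pruned)
    return (total - kept) / total if total else 0.0
```

With `w = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2]` and a budget of 3, `magnitude_prune(w, 3)` returns `[0.9, 0.0, 0.4, 0.0, -0.7, 0.0]`, and `dropped_fraction` reports that roughly 11.5% of the weight mass was discarded. Whatever the zeroed weights contributed to the model's outputs is no longer computed, which is where the accuracy loss described above comes from.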

In contrast, the FPGA resources in the Catapult platform can be treated as effectively "infinite", so a large DNN model can be broken down into many small parts, each of which maps entirely onto a single FPGA, with the parts interconnected through the high-speed data center network. This meets the low-latency and high-bandwidth performance requirements while preserving the integrity of the model, with no loss of accuracy or quality.
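The splitting step can be sketched as a greedy partition of consecutive layers. This is a minimal sketch, assuming the model is a simple chain of layers and that each FPGA's capacity can be summarized as a single number in MB; both are simplifications, the function name is hypothetical, and the source does not describe Catapult's actual partitioning method.

```python
def partition_layers(layer_mb: list[float], fpga_capacity_mb: float) -> list[list[int]]:
    """Greedily split consecutive layers into groups whose total size fits one FPGA.
    Returns the layer indices assigned to each FPGA, in pipeline order."""
    groups: list[list[int]] = []
    current: list[int] = []
    used = 0.0
    for i, size in enumerate(layer_mb):
        if size > fpga_capacity_mb:
            raise ValueError(f"layer {i} ({size} MB) does not fit on a single FPGA")
        if used + size > fpga_capacity_mb:
            # Current FPGA is full: close its group and start on the next FPGA.
            groups.append(current)
            current, used = [], 0.0
        current.append(i)
        used += size
    if current:
        groups.append(current)
    return groups
```

For hypothetical layer sizes `[30, 25, 40, 10, 35, 20]` MB and a 64 MB per-FPGA capacity, `partition_layers` returns `[[0, 1], [2, 3], [4, 5]]`: three FPGAs, each holding two consecutive layers and passing its activations to the next FPGA over the network. No weights are removed, so the model stays intact.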

If you want to know more, our website has product specifications for CPUs; visit ALLICDATA ELECTRONICS LIMITED for more information.