How to analyze target recognition technology in embedded systems

Object detection and recognition are essential components of computer vision systems. Before a computer can interpret a scene, the scene must be decomposed into components that the computer can see and analyze.

The first step in computer vision is feature extraction, which detects key points in an image and extracts meaningful information about them. The process has four basic stages: image preparation, keypoint detection, descriptor generation, and classification. In effect, it examines every pixel to determine whether a feature is present at that location.

Feature extraction algorithms describe an image as a set of feature vectors that point to key elements in the image. This article reviews a series of feature detection algorithms and, along the way, shows how general target recognition and specific feature recognition have evolved over the years. Scale-Invariant Feature Transform (SIFT) and Good Features To Track (GFTT) are early examples of feature extraction technology. However, both are computationally intensive and involve a large number of floating-point operations, so they are poorly suited to real-time embedded platforms.

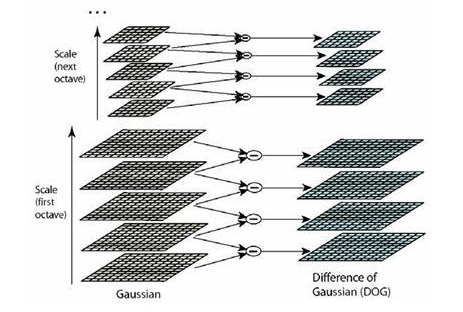

Take SIFT as an example. This high-precision algorithm produces good results in many cases. It locates features with sub-pixel precision, but keeps only corner-like features. And although SIFT is very accurate, it is difficult to run in real time, so implementations often work on a lower-resolution input image.

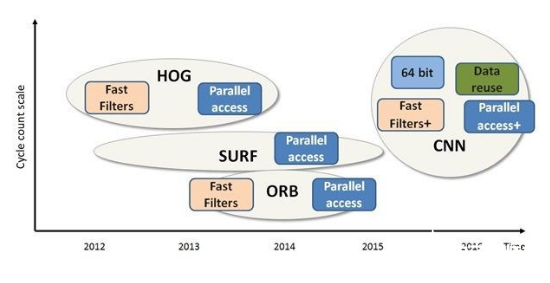

As a result, SIFT is not commonly used today; it serves mainly as a reference benchmark for measuring the quality of newer algorithms. The need to reduce computational complexity ultimately led to a new class of feature extraction algorithms that are easier to implement. Speeded Up Robust Features (SURF) was one of the first feature detectors designed with efficiency in mind. It replaces the large number of operations in SIFT with a series of additions and subtractions over rectangles of different sizes. These operations are also easy to vectorize and require less memory.
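
The "additions and subtractions over rectangles" idea is usually realized with an integral image (summed-area table). The following is a minimal sketch of that building block only, not a SURF implementation; the function names `integral_image` and `box_sum` are our own, chosen for illustration.

```python
def integral_image(img):
    """Build a summed-area table with an extra leading row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above and to the left, inclusive.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii, top, left, height, width):
    """Sum the pixels of any rectangle with three additions/subtractions."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


img = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
ii = integral_image(img)
print(box_sum(ii, 1, 1, 2, 2))  # 6 + 7 + 10 + 11 = 34
```

Once the table is built, the cost of a box sum no longer depends on the size of the rectangle, which is why box filters of different sizes stay cheap.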

Next came Histograms of Oriented Gradients (HOG), a popular pedestrian detection algorithm commonly used in the automotive industry. HOG can be tuned in several ways: it can use different scales to detect objects of different sizes, and it can vary the amount of overlap between blocks to improve detection quality without increasing the amount of computation. It can also take advantage of parallel memory access rather than processing only one lookup table at a time, as traditional memory systems do, so lookups speed up in proportion to the degree of memory parallelism.
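
To make the core of HOG concrete, here is a simplified sketch of the histogram computed for a single cell. It assumes central-difference gradients, unsigned orientations (0 to 180 degrees), and hard assignment to 9 bins; it omits the vote interpolation and overlapping block normalization that a full HOG pipeline uses. The name `cell_histogram` is hypothetical.

```python
import math


def cell_histogram(cell, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations for one cell."""
    h, w = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    # Border pixels are skipped because central differences need both neighbours.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            # Fold the direction into [0, 180) so opposite gradients share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# A vertical edge: intensity changes only from left to right.
vertical_edge = [[0, 0, 10, 10] for _ in range(4)]
print(cell_histogram(vertical_edge))  # all the weight lands in bin 0
```

For the vertical edge above, every interior pixel has a purely horizontal gradient, so the whole vote goes into the first bin.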

Oriented FAST and Rotated BRIEF (ORB), an efficient alternative to SIFT, then introduced binary descriptors for feature extraction. ORB adds an orientation estimate to the FAST corner detector and rotates the BRIEF descriptor to align with the corner direction. Combining binary descriptors with lightweight functions such as FAST and the Harris corner measure yields a descriptor that is very computationally efficient and fairly accurate.
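
The efficiency of binary descriptors comes from two cheap operations: pairwise intensity comparisons to build the descriptor, and a Hamming distance to compare two descriptors. The sketch below illustrates a plain BRIEF-style descriptor under our own assumptions (32 bits, random sampling pairs, no smoothing); it deliberately leaves out the orientation compensation that ORB adds. All function names are hypothetical.

```python
import random


def make_pairs(n_bits=32, patch_radius=3, seed=0):
    """Choose fixed random pixel-offset pairs inside a square patch."""
    rng = random.Random(seed)
    r = patch_radius
    return [
        ((rng.randint(-r, r), rng.randint(-r, r)),
         (rng.randint(-r, r), rng.randint(-r, r)))
        for _ in range(n_bits)
    ]


def brief_descriptor(img, cx, cy, pairs):
    """Pack one intensity comparison per pair into the bits of an integer."""
    bits = 0
    for i, ((dx1, dy1), (dx2, dy2)) in enumerate(pairs):
        if img[cy + dy1][cx + dx1] < img[cy + dy2][cx + dx2]:
            bits |= 1 << i
    return bits


def hamming(a, b):
    """Number of differing bits: an XOR followed by a population count."""
    return bin(a ^ b).count("1")


pairs = make_pairs()
img = [[(x * 7 + y * 13) % 31 for x in range(9)] for y in range(9)]
brighter = [[v + 50 for v in row] for row in img]

d1 = brief_descriptor(img, 4, 4, pairs)
d2 = brief_descriptor(brighter, 4, 4, pairs)
print(hamming(d1, d2))  # 0: comparisons are unaffected by a uniform brightness shift
```

Matching reduces to integer XOR and bit counting, with no floating-point arithmetic, which is what makes this family of descriptors attractive on embedded processors.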

Camera-equipped smartphones, tablets, wearables, surveillance systems, and automotive systems now rely on intelligent vision, and this has brought the industry to a crossroads: more advanced algorithms are needed to implement computationally intensive applications that deliver a richer user experience and adapt intelligently to their environment. Once again, computational complexity must be reduced so that these powerful algorithms can meet the demanding constraints of mobile and embedded devices.

Inevitably, the need for more accurate and more flexible algorithms leads to vector-accelerated deep learning algorithms, such as convolutional neural networks (CNNs), for classifying, locating, and detecting targets in images. In traffic sign recognition, for example, CNN-based algorithms outperform traditional target detection algorithms in recognition accuracy. Beyond quality, the main advantage of CNNs over traditional object detection algorithms is that they are highly adaptable: a CNN can be quickly "tuned" to new targets without changing the algorithm code. CNNs and other deep learning algorithms are therefore expected to become mainstream target detection methods in the near future.

CNNs place very heavy computational demands on mobile and embedded devices. Convolution accounts for most of the computation in a CNN. A two-dimensional convolutional layer lets the implementer exploit overlapping convolutions to improve processing efficiency by applying one or more filters to the same input at the same time. For embedded platforms, designers therefore need to perform convolutions very efficiently to take full advantage of the CNN data flow.
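
As an illustration of applying several filters to the same input, the sketch below fetches each input window once and reuses it for every kernel. It is a plain Python model written for clarity, not an optimized or vendor-specific implementation: it assumes a single-channel image, no padding, stride 1, and, as is conventional in CNN frameworks, computes cross-correlation (the kernel is not flipped). The name `conv2d_multi` is hypothetical.

```python
def conv2d_multi(image, kernels):
    """Apply several same-sized kernels to one image, loading each window once."""
    kh, kw = len(kernels[0]), len(kernels[0][0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    outputs = [[[0] * out_w for _ in range(out_h)] for _ in kernels]
    for y in range(out_h):
        for x in range(out_w):
            # Fetch the input window once and share it across all filters.
            window = [image[y + i][x:x + kw] for i in range(kh)]
            for k, kernel in enumerate(kernels):
                acc = 0
                for i in range(kh):
                    for j in range(kw):
                        acc += window[i][j] * kernel[i][j]  # one multiply-accumulate
                outputs[k][y][x] = acc
    return outputs


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
pick_top_left = [[1, 0], [0, 0]]
box = [[1, 1], [1, 1]]
first, second = conv2d_multi(image, [pick_top_left, box])
print(first)   # [[1, 2], [4, 5]]
print(second)  # [[12, 16], [24, 28]]
```

The inner multiply-accumulate is the operation a vector processor would execute in parallel across many pixels and filters.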

Strictly speaking, a CNN is not an algorithm but an implementation framework. It lets users optimize the basic building blocks and assemble an efficient neural network detection application. Because the CNN framework computes each pixel individually, and pixel-by-pixel computation is very demanding, the overall computational load is high.

CEVA has found two further ways to increase computational efficiency while continuing to develop support for emerging algorithms such as CNNs. The first is a parallel random memory access mechanism, which supports multi-scalar functions and allows a vector processor to perform loads in parallel. The second is a sliding window mechanism, which improves data utilization and prevents the same data from being loaded repeatedly. Most imaging filters and convolutions over large input frames involve a large amount of data overlap. This overlap grows as the processor's vector width increases, and it can be exploited to reduce data traffic between the processor and memory, which in turn reduces power consumption. By exploiting this large-scale data overlap, the mechanism lets developers implement efficient convolutions in deep learning algorithms and generally makes DSP MAC operations extremely efficient.
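
The benefit of reusing overlapping data can be illustrated with a small software model. The sketch below is only an analogy under our own assumptions, not a description of CEVA's hardware mechanism: it compares a one-dimensional filter that reloads its whole window for every output with one that keeps the window and loads a single new sample per step. The function names and the `loads` counter are hypothetical.

```python
from collections import deque


def conv1d_naive(row, kernel):
    """Reload the full window for every output sample."""
    k = len(kernel)
    out, loads = [], 0
    for x in range(len(row) - k + 1):
        window = row[x:x + k]
        loads += k  # every window element is fetched again
        out.append(sum(w * c for w, c in zip(window, kernel)))
    return out, loads


def conv1d_sliding(row, kernel):
    """Keep the window between outputs and fetch only the new sample."""
    k = len(kernel)
    window = deque(row[:k], maxlen=k)
    loads = k  # initial fill
    out = [sum(w * c for w, c in zip(window, kernel))]
    for x in range(k, len(row)):
        window.append(row[x])  # the oldest sample drops out automatically
        loads += 1
        out.append(sum(w * c for w, c in zip(window, kernel)))
    return out, loads


row = list(range(10))
kernel = [1, 2, 1]
naive_out, naive_loads = conv1d_naive(row, kernel)
sliding_out, sliding_loads = conv1d_sliding(row, kernel)
print(naive_out == sliding_out)    # True
print(naive_loads, sliding_loads)  # 24 10
```

Both versions produce the same result, but the sliding version touches each input sample exactly once, and the saving grows with the kernel width.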

Deep learning algorithms for target recognition have once again raised the bar for computational complexity, so a new type of intelligent vision processor is needed, one that improves both processing efficiency and accuracy. The CEVA-XM4, CEVA's latest vision and imaging platform, combines vision algorithm expertise with processor architecture technology to provide a vision processor designed to meet the challenges of embedded computer vision.
