What is the difference between CPU and GPU architecture?

First, let's take a look at the architecture of a general-purpose CPU.

CPU

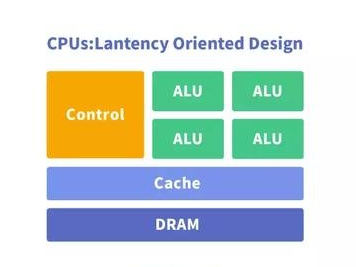

CPU (Central Processing Unit) central processing unit is a very large-scale integrated circuit. The main logical architecture includes control unit Control, arithmetic unit ALU and cache and data, control and connection and state bus between them.

Simply say, it is a calculation unit, a control unit, and a storage unit.

The architecture diagram is as follows:

The CPU follows the von Neumann architecture, whose core ideas are the stored program and sequential execution. A CPU devotes a large share of its chip area to the storage unit (Cache) and the control unit (Control), while the computing units (ALU) occupy only a small part. As a result, its capacity for massively parallel computation is limited, and it is better suited to logic control.
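To make "stored program and sequential execution" concrete, here is a minimal sketch of a toy von Neumann machine. It assumes a hypothetical four-instruction set (LOAD, ADD, STORE, HALT) invented purely for illustration; it does not describe any real CPU's instruction set. Instructions and data share one memory, and a program counter steps through the instructions one at a time.

```python
# A toy stored-program (von Neumann) machine: instructions and data share one
# memory, and the control unit fetches and executes one instruction at a time.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical, for illustration only.

def run(memory: list) -> list:
    """Execute the program stored in `memory`, starting at address 0."""
    pc = 0    # program counter, held by the control unit
    acc = 0   # accumulator register, the ALU's working value
    while True:
        op, arg = memory[pc]          # fetch the instruction at address pc
        pc += 1                       # sequential execution: advance to the next instruction
        if op == "LOAD":              # decode, then execute
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]        # the ALU does the arithmetic
        elif op == "STORE":
            memory[arg] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")


# Program at addresses 0-3, data at addresses 4-6: memory[6] = memory[4] + memory[5]
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    2, 3, 0,
]
print(run(program)[6])  # prints 5
```

Every step, including the arithmetic, waits for the previous instruction to finish, which is the sequential bottleneck the paragraph above describes.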

The CPU cannot efficiently perform large amounts of parallel matrix computation, but the GPU can.
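Matrix multiplication shows why this matters. In the plain-Python sketch below (the function names are hypothetical), each output element C[i][j] is an independent dot product, so the elements can be computed in any order, or all at once, and the result is the same. A CPU core typically works through them a few at a time; a GPU can hand them to thousands of threads.

```python
import random


def matmul_element(a, b, i, j):
    """Compute one output element C[i][j]; it only reads A and B."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul(a, b, shuffle=False):
    n, m = len(a), len(b[0])
    c = [[0] * m for _ in range(n)]
    indices = [(i, j) for i in range(n) for j in range(m)]
    if shuffle:
        # Visit the output elements in a random order. Because no element
        # depends on another, the result does not change, which is what lets
        # a GPU compute them concurrently (for example, one thread per element).
        random.shuffle(indices)
    for i, j in indices:
        c[i][j] = matmul_element(a, b, i, j)
    return c


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul(A, B))                                # prints [[19, 22], [43, 50]]
print(matmul(A, B, shuffle=True) == matmul(A, B))  # prints True
```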

GPU

The GPU (Graphics Processing Unit), a GPU (Graphics Processing Unit), is a massively parallel computing architecture consisting of a large number of computing units designed to handle multiple tasks simultaneously.

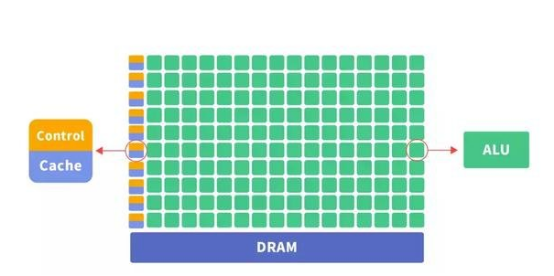

Why is the GPU capable of parallel computing? The GPU also contains basic computing units, control units, and storage units, but the GPU architecture is different from the CPU, as shown in the following figure:

In a CPU, ALUs take up less than 20% of the chip area; in a GPU, they take up more than 80%. In other words, the GPU devotes far more of its silicon to ALUs for data-parallel processing.
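One way to picture how those ALUs are kept busy is the thread-indexing scheme used by CUDA-style kernels: each thread computes a global index from its block and thread IDs (in CUDA, `blockIdx.x * blockDim.x + threadIdx.x`) and handles one data element. The sketch below emulates that mapping in plain Python and runs the "threads" one after another; the function names are hypothetical and no GPU is involved.

```python
# Sequential emulation of a CUDA-style kernel launch. block_idx, block_dim, and
# thread_idx mirror CUDA's blockIdx.x, blockDim.x, and threadIdx.x; this is a
# plain-Python illustration, not GPU code.

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    i = block_idx * block_dim + thread_idx   # global thread index
    if i < len(out):                         # guard: the grid may be larger than the data
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    # On a GPU these iterations would run concurrently across many cores;
    # here they simply run in sequence.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
a = list(range(n))
b = [10 * x for x in range(n)]
out = [0] * n
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim   # ceiling division: 3 blocks of 4 threads
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)  # prints [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

The bounds check matters because the grid is rounded up to whole blocks: 12 threads are launched for 10 elements, and the last two do nothing.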

In general, a GPU processes neural network workloads far more efficiently than a CPU.

In summary, the GPU has the following characteristics:

1. Massive multi-threading: the GPU provides the infrastructure for many-core parallel computing, with a very large number of cores, so it can process large amounts of data in parallel.

2. Faster memory access (higher memory bandwidth).

3. Higher floating-point computing power.
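Item 3 is usually quantified as theoretical peak throughput: cores × clock rate × floating-point operations per core per cycle (a fused multiply-add counts as two). The sketch below applies that formula to hypothetical round numbers chosen only to show the scale of the gap; they are not the specifications of any real CPU or GPU. Sustained performance is always lower and also depends on memory bandwidth (item 2).

```python
def peak_flops(cores, clock_hz, flops_per_cycle_per_core):
    """Theoretical peak floating-point operations per second."""
    return cores * clock_hz * flops_per_cycle_per_core


# Hypothetical round numbers, not the specs of any real product:
# a few wide cores (lots of SIMD work per cycle) versus many simple cores.
cpu = peak_flops(cores=16, clock_hz=3.0e9, flops_per_cycle_per_core=32)
gpu = peak_flops(cores=10_000, clock_hz=1.5e9, flops_per_cycle_per_core=2)
print(f"CPU: {cpu / 1e12:.2f} TFLOPS")   # prints CPU: 1.54 TFLOPS
print(f"GPU: {gpu / 1e12:.2f} TFLOPS")   # prints GPU: 30.00 TFLOPS
```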

Therefore, the GPU is better suited than the CPU to deep learning, which involves large amounts of training data, many matrix operations, and convolutions.
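Convolution, the core operation of convolutional networks, has the same data-parallel structure. In the naive sketch below (a hypothetical helper: single channel, no padding, stride 1, computed as cross-correlation the way deep learning frameworks do), every output pixel is an independent dot product between the kernel and one image patch, so a GPU can compute them concurrently.

```python
def conv2d(image, kernel):
    """Naive 2D 'valid' convolution (cross-correlation, as in deep learning frameworks)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0] * ow for _ in range(oh)]
    # Every output pixel is an independent dot product between the kernel and
    # one image patch, so all oh * ow of them could be computed in parallel.
    for y in range(oh):
        for x in range(ow):
            out[y][x] = sum(
                image[y + dy][x + dx] * kernel[dy][dx]
                for dy in range(kh)
                for dx in range(kw)
            )
    return out


image = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]
print(conv2d(image, kernel))  # prints [[-4, -4, 2], [-4, -4, 4]]
```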

Although the GPU excels at parallel computing, it does not work alone; it relies on the CPU to coordinate it. Building the neural network model and moving data between memories are still handled by the CPU. GPUs also have drawbacks: high power consumption and large physical size.
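The division of labor can be sketched as follows: the CPU (host) prepares the data, copies it into device memory, launches the work, and copies the results back. This plain-Python simulation uses a hypothetical `FakeDevice` class as a stand-in for a real GPU runtime (in CUDA, roughly `cudaMemcpy` plus a kernel launch); it runs entirely on the CPU and only shows the order of the steps.

```python
# Plain-Python simulation of CPU/GPU co-processing. FakeDevice and its methods
# are hypothetical stand-ins for a real GPU runtime; nothing here runs on a GPU.

class FakeDevice:
    def __init__(self):
        self.memory = {}                      # separate "device memory"

    def copy_to_device(self, name, host_data):
        self.memory[name] = list(host_data)   # host -> device transfer

    def launch(self, kernel, out_name, *in_names):
        inputs = [self.memory[n] for n in in_names]
        # On a real GPU, each element would be handled by its own thread.
        self.memory[out_name] = [kernel(*vals) for vals in zip(*inputs)]

    def copy_to_host(self, name):
        return list(self.memory[name])        # device -> host transfer


def scale_shift_relu_on_device(device, x, w, b):
    """The CPU (host) orchestrates: move data in, launch work, fetch results."""
    device.copy_to_device("x", x)
    device.copy_to_device("w", w)
    device.copy_to_device("b", b)
    # Elementwise y = max(0, x * w + b), a toy stand-in for one network step.
    device.launch(lambda xi, wi, bi: max(0.0, xi * wi + bi), "y", "x", "w", "b")
    return device.copy_to_host("y")


print(scale_shift_relu_on_device(FakeDevice(), [1.0, -2.0, 3.0], [2.0, 2.0, -1.0], [0.5, 0.5, 0.5]))
# prints [2.5, 0.0, 0.0]
```

In a real framework the copies are often the bottleneck, which is one reason data transfer is managed carefully on the CPU side.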