In the era of artificial intelligence, will FPGAs be used in more applications?

Microsoft's first large-scale deployment of FPGAs in its data centers came from the Catapult project. The project's main achievement was an FPGA-based hardware acceleration platform for the data center, including all the necessary hardware and software infrastructure. Over three phases of development, Catapult has helped Microsoft deploy thousands of FPGA acceleration resources in its cloud data centers around the world.

Brainwave Project: System Architecture

The main goal of the Brainwave project is to use Catapult's large-scale FPGA infrastructure to give users without hardware design experience automated deployment and hardware acceleration of deep neural networks (DNNs), while meeting the real-time and low-cost requirements of systems and models.

To achieve this goal, the Brainwave project provides a complete hardware and software solution with three main components:

A tool chain that automatically partitions trained DNN models according to available resources and requirements;

A system architecture that maps the partitioned sub-models onto FPGAs and CPUs;

An NPU soft core and its instruction set, implemented and optimized on the FPGA.

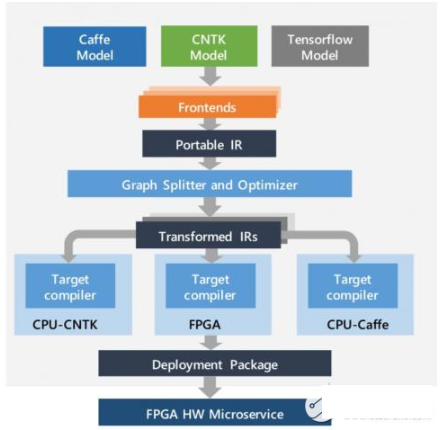

The diagram below shows the complete DNN acceleration flow of the Brainwave project. Given a trained DNN model, the tool chain first converts it into a computational graph called the intermediate representation (IR).

The complete DNN acceleration flow of the Brainwave project

In this graph, the nodes represent tensor operations such as matrix multiplication, and the edges connecting them represent the data flow between operations, as shown in the following figure.

Once the IR is built, the tool partitions the full graph into several subgraphs, each small enough to be mapped entirely onto a single FPGA. Any operations in the model that cannot be implemented on the FPGA are mapped to the CPU attached to that FPGA. The result is a heterogeneous DNN acceleration system built on the Catapult architecture.
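The partitioning step can be pictured with a small sketch. This is not the Brainwave tool chain: it assumes a hypothetical `Node` record with a made-up per-node resource `cost`, a hypothetical set `FPGA_OPS` of FPGA-supported operations, and a simple greedy pass over a topologically ordered node list that opens a new FPGA subgraph whenever the current one would exceed its capacity.

```python
from dataclasses import dataclass, field

# Hypothetical set of IR operation types the FPGA NPU can run; anything else
# is mapped to the host CPU. The contents of this set are an assumption.
FPGA_OPS = {"matmul", "add", "relu", "sigmoid", "tanh"}


@dataclass
class Node:
    name: str
    op: str                                     # tensor operation, e.g. "matmul"
    cost: int                                   # hypothetical on-chip resource cost
    inputs: list = field(default_factory=list)  # upstream node names (graph edges)


def partition(nodes, fpga_capacity):
    """Greedily split a topologically ordered IR into FPGA subgraphs and CPU ops."""
    subgraphs, current, used = [], [], 0
    cpu_ops = []
    for node in nodes:
        if node.op not in FPGA_OPS:
            cpu_ops.append(node.name)           # unsupported op: run on the attached CPU
            continue
        if node.cost > fpga_capacity:
            raise ValueError(f"{node.name} cannot fit on a single FPGA")
        if used + node.cost > fpga_capacity:    # current FPGA is full: open a new one
            subgraphs.append(current)
            current, used = [], 0
        current.append(node.name)
        used += node.cost
    if current:
        subgraphs.append(current)
    return subgraphs, cpu_ops


# A toy IR: two dense layers, an unsupported top-k op, and a final dense layer.
ir = [
    Node("fc1", "matmul", 6),
    Node("act1", "relu", 1, ["fc1"]),
    Node("fc2", "matmul", 6, ["act1"]),
    Node("top", "topk", 1, ["fc2"]),
    Node("fc3", "matmul", 4, ["top"]),
]
fpga_groups, cpu_ops = partition(ir, fpga_capacity=8)
print(fpga_groups)  # [['fc1', 'act1'], ['fc2'], ['fc3']]
print(cpu_ops)      # ['top']
```

A real partitioner would also weigh the data crossing each cut (the graph edges) and the latency of the FPGA interconnect; this greedy pass only respects node order.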

For the logic implementation on the FPGA, the Brainwave project adopts two main techniques to meet the two key requirements of “low latency” and “high bandwidth” mentioned above.

First, the Brainwave project uses custom narrow-precision data formats, a common technique in DNN acceleration. The project defines 8- and 9-bit floating-point formats, called ms-fp8 and ms-fp9. Compared with fixed-point formats of the same bit width, these formats need roughly the same amount of logic resources but offer a wider dynamic range and higher precision.

Compared with conventional 32-bit floating point, the accuracy loss from an 8- or 9-bit floating-point representation is small, and retraining the model can compensate for it.
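To make the effect of narrow precision concrete, the sketch below rounds a value to a small sign/exponent/mantissa floating-point format. The bit split (5 exponent bits with 2 mantissa bits for an "ms-fp8-like" format, 3 for "ms-fp9-like") is an assumption, and the model is simplified: each value is rounded independently, subnormals are flushed to zero, and overflow saturates. It should not be read as Microsoft's exact encoding.

```python
import math


def quantize(x: float, exp_bits: int, man_bits: int) -> float:
    """Round x to the nearest value of a small sign/exponent/mantissa float format.

    Simplified model (an assumption, not the exact ms-fp encoding): IEEE-style
    exponent bias, no subnormals (tiny values flush to zero), no inf/NaN, and
    overflow saturates to the largest finite value.
    """
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    frac, k = math.frexp(abs(x))        # abs(x) == frac * 2**k, frac in [0.5, 1)
    sig, e = frac * 2.0, k - 1          # rewrite as sig * 2**e, sig in [1, 2)
    scale = 2 ** man_bits
    sig = round(sig * scale) / scale    # keep man_bits fraction bits (ties to even)
    if sig == 2.0:                      # rounding carried into the next power of two
        sig, e = 1.0, e + 1
    bias = 2 ** (exp_bits - 1) - 1
    if e > bias:                        # too large: saturate
        return sign * (2.0 - 1.0 / scale) * 2.0 ** bias
    if e < 1 - bias:                    # too small for a normal number: flush to zero
        return 0.0
    return sign * sig * 2.0 ** e


for name, man_bits in (("ms-fp8-like", 2), ("ms-fp9-like", 3)):
    print(name, quantize(0.1, exp_bits=5, man_bits=man_bits))
# ms-fp8-like 0.09375
# ms-fp9-like 0.1015625
```

Each extra mantissa bit halves the worst-case relative rounding error, which is why the 9-bit variant lands closer to 0.1; errors of this size are the kind that retraining is meant to absorb.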

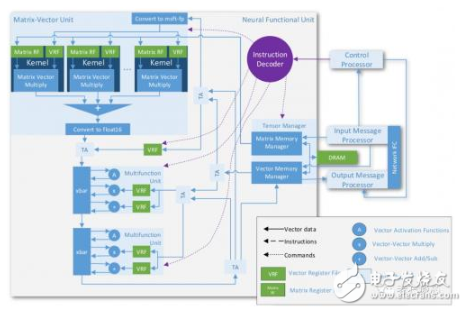

Second, board-level DDR memory is abandoned entirely, and all data is stored in high-speed on-chip RAM. With any single-chip solution, whether ASIC or FPGA, this would not be possible.

The Intel Stratix 10 FPGA used in the Brainwave project contains 11,721 SRAM blocks of 512 × 40 bits each, about 30 MB of on-chip memory in total (11,721 × 512 × 40 bits ≈ 240 Mbit), with an aggregate bandwidth of about 35 TB/s at a 600 MHz operating frequency (11,721 × 40 bits × 600 MHz ≈ 281 Tbit/s). 30 MB of on-chip memory is not enough for DNN applications on its own, but the low-latency interconnect of Catapult's very large-scale FPGA deployment lets the limited on-chip RAM of each FPGA combine into a seemingly "unlimited" resource pool. This is a major breakthrough against the memory bandwidth limits that have long held back DNN acceleration.

The core unit of the Brainwave project is a soft-core NPU implemented on the FPGA, together with its NPU instruction set. This soft-core NPU is essentially a compromise between high performance and high flexibility. From a macro perspective, a DNN can be implemented in hardware in several ways: on a CPU, GPU, FPGA, or ASIC. The CPU offers the greatest flexibility but unsatisfactory performance; an ASIC is the opposite. FPGAs strike a good balance between performance and flexibility.

From a micro perspective, a DNN can be implemented on an FPGA in different ways. Designers can write low-level RTL to optimize for a specific network structure, or they can use high-level synthesis (HLS) to describe the network quickly in a high-level language.

However, the former requires extensive FPGA hardware design experience and a long development cycle, while with the latter, limitations of the development tools and other constraints often leave the resulting hardware short of its performance requirements.

Microsoft therefore adopted a soft-core NPU with a dedicated instruction set. This approach delivers performance, because hardware engineers can keep optimizing the NPU's architecture and implementation, and flexibility, because software engineers can quickly describe DNN algorithms through the instruction set.
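The idea of describing a DNN through an instruction set can be illustrated with a toy interpreter. The opcodes below (`v_rd`, `mv_mul`, `vv_add`, `v_relu`, `v_wr`) and the single-register machine are hypothetical, chosen only to show how a software engineer might express a dense layer y = relu(Wx + b) as a short instruction sequence without writing any RTL.

```python
# Hypothetical mini instruction set for illustration; not the real NPU ISA.
def run(program, memory):
    """Execute a list of (opcode, *operands) tuples using one vector register."""
    reg = None
    for op, *args in program:
        if op == "v_rd":            # load a vector from memory into the register
            reg = list(memory[args[0]])
        elif op == "mv_mul":        # multiply a stored matrix by the register
            reg = [sum(w * x for w, x in zip(row, reg)) for row in memory[args[0]]]
        elif op == "vv_add":        # element-wise add a stored vector
            reg = [a + b for a, b in zip(reg, memory[args[0]])]
        elif op == "v_relu":        # element-wise ReLU
            reg = [max(0.0, a) for a in reg]
        elif op == "v_wr":          # write the register back to memory
            memory[args[0]] = reg
        else:
            raise ValueError(f"unknown opcode {op}")
    return memory


# A dense layer y = relu(W x + b) written as five instructions.
dense_layer = [
    ("v_rd", "x"),
    ("mv_mul", "W"),
    ("vv_add", "b"),
    ("v_relu",),
    ("v_wr", "y"),
]

mem = {"x": [1.0, 2.0], "W": [[1.0, -1.0], [0.5, 0.5]], "b": [0.0, 1.0]}
run(dense_layer, mem)
print(mem["y"])  # [0.0, 2.5]
```

The program is the only thing the software engineer writes; how `mv_mul` is realized in hardware stays the hardware engineer's concern, which is the split of responsibilities described above.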

The core of the NPU is the matrix-vector multiplication unit (MVU). It is deeply optimized for the underlying hardware structure of the FPGA and uses the "on-chip memory" and "low precision" techniques described above to further improve system performance.
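A matrix-vector unit commonly breaks a large product into fixed-size tiles that map onto parallel hardware lanes. The sketch below shows that decomposition in plain Python; the tile size `TILE = 4` is a hypothetical value, and the sequential loops stand in for dot products that the MVU would evaluate in parallel.

```python
TILE = 4  # hypothetical native tile size; the real MVU dimensions are not given here


def tiled_mvm(matrix, vector, tile=TILE):
    """Compute matrix @ vector by accumulating over tile x tile blocks."""
    rows, cols = len(matrix), len(vector)
    out = [0.0] * rows
    for r0 in range(0, rows, tile):             # block of output rows
        for c0 in range(0, cols, tile):         # block of input columns
            c1 = min(c0 + tile, cols)
            for r in range(r0, min(r0 + tile, rows)):
                # In hardware these partial dot products run in parallel lanes,
                # reading weights that stay resident in on-chip RAM.
                out[r] += sum(matrix[r][c] * vector[c] for c in range(c0, c1))
    return out


m = [[r + c for c in range(5)] for r in range(6)]   # 6 x 5 test matrix
v = [1.0] * 5
print(tiled_mvm(m, v))  # [10.0, 15.0, 20.0, 25.0, 30.0, 35.0]
```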

One of the NPU's most important features is its "Super SIMD" instruction set architecture. It resembles a GPU's SIMD instruction set, but a single NPU instruction can generate more than one million operations; on the Intel Stratix 10 FPGA, this corresponds to 130,000 operations per clock cycle.
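The scale of a single "Super SIMD" instruction follows from simple arithmetic. The sketch assumes a hypothetical 1000 × 1000 matrix-vector multiply and the common convention of counting each multiply-add as two operations; it then divides by the 130,000 operations per cycle quoted above.

```python
import math

OPS_PER_CYCLE = 130_000  # per-cycle figure quoted above for the Stratix 10 NPU


def mvm_ops(rows: int, cols: int) -> int:
    """Operations in one matrix-vector multiply, counting each multiply-add as 2."""
    return 2 * rows * cols


ops = mvm_ops(1000, 1000)                    # one hypothetical 1000 x 1000 instruction
cycles = math.ceil(ops / OPS_PER_CYCLE)      # cycles to retire it at the quoted rate
print(ops, cycles)  # 2000000 16
```

Under these assumptions one instruction issues two million operations, enough to keep the whole NPU busy for about 16 cycles, which is what lets a single instruction amortize its decode and control overhead.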

Brainwave Project: Performance Improvement

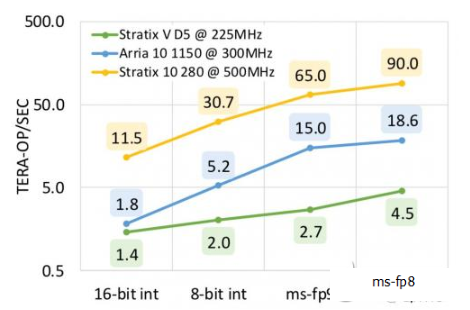

The peak performance of the Brainwave NPU on different FPGAs is shown in the figure below. With ms-fp8 precision, the Brainwave NPU reaches a peak of 90 TFLOPS on the Stratix 10 FPGA, a figure comparable to existing high-end NPUs.

The Brainwave project also