How does Microsoft launch an FPGA-based deep learning acceleration platform?

The Microsoft team has launched a new deep learning acceleration platform, code-named Project Brainwave, which this article briefly introduces. Project Brainwave aims to deliver major gains in performance and flexibility for cloud-based serving of deep learning models.

Microsoft designed the system for real-time AI, meaning it handles requests as soon as they are received, with very low latency. Real-time AI is becoming more important as cloud infrastructure processes live data streams such as search queries, video, sensor streams, and interactions with users.

FPGAs are becoming increasingly important for deep learning inference for several reasons: they deliver excellent inference performance at low batch sizes; they serve modern DNNs with ultra-low latency, more than 10x lower than CPUs and GPUs; and a single DNN service can scale across many FPGAs. FPGAs are also well suited to keeping pace with rapidly evolving ML: CNNs, LSTMs, MLPs, reinforcement learning, feature extraction, decision trees, and so on. They allow numerical precision to be optimized for inference, and they can exploit sparsity and deep compression to serve larger models faster.

Microsoft has made the world's largest cloud investment in FPGAs, which gives it a large aggregate AI compute capacity, and Project Brainwave runs on this at-scale infrastructure. Project Brainwave is a scalable, FPGA-powered DNN serving platform with three characteristics: ultra-low-latency, high-throughput serving of DNN models at low batch sizes; adaptive numerical precision and custom operators; and turnkey deployment of models from CNTK, Caffe, TensorFlow, and other frameworks.

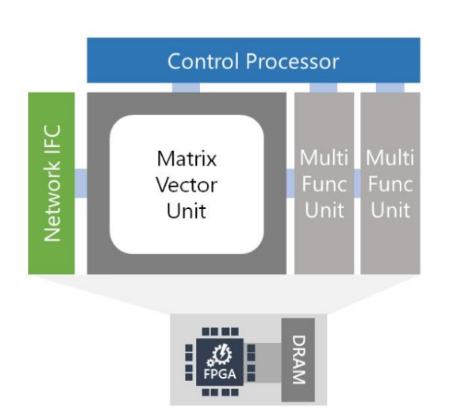

The Project Brainwave system is built on three main layers: a hardware DNN engine synthesized onto FPGAs; a high-performance distributed system architecture; and a compiler and runtime for low-friction deployment of trained models.

First, Project Brainwave takes advantage of the large FPGA infrastructure that Microsoft has deployed over the years. By attaching high-performance FPGAs directly to our data center network, we can serve DNNs as hardware microservices, where a DNN is mapped to a pool of remote FPGAs and called by a server with no software in the loop.

This system architecture both reduces latency, because the CPU does not need to process incoming requests, and allows very high throughput, with the FPGA processing requests as fast as the network can stream them.

Second, Project Brainwave uses a software stack that supports multiple popular deep learning frameworks. We already support the Microsoft Cognitive Toolkit and Google's TensorFlow, and we plan to support other frameworks. We have defined a graph-based intermediate representation: a model trained in one of these frameworks is converted to the intermediate representation and then compiled down to our high-performance infrastructure.
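The import-then-compile flow described above can be sketched in a few lines of Python. This is only a toy illustration: the `Node` class, the `import_model` and `compile_graph` functions, and the instruction strings are all hypothetical names invented here, not part of Project Brainwave's actual toolchain, whose intermediate representation is not specified in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One operation in a hypothetical graph-based IR."""
    name: str
    op: str                                   # e.g. "input", "matmul", "sigmoid"
    inputs: list = field(default_factory=list)


def import_model(layers):
    """Turn a framework-style list of layer names into a chain of IR nodes."""
    nodes = [Node("x", "input")]
    for i, layer in enumerate(layers):
        nodes.append(Node(f"n{i}", layer, [nodes[-1].name]))
    return nodes


def topo_order(nodes):
    """Order nodes so that each one appears after all of its inputs."""
    by_name = {n.name: n for n in nodes}
    seen, order = set(), []

    def visit(node):
        if node.name in seen:
            return
        seen.add(node.name)
        for dep in node.inputs:
            visit(by_name[dep])
        order.append(node)

    for node in nodes:
        visit(node)
    return order


def compile_graph(nodes):
    """Lower each IR node to one made-up accelerator instruction string."""
    return [
        f"{n.op.upper()} {n.name} <- {', '.join(n.inputs) or 'host'}"
        for n in topo_order(nodes)
    ]


program = compile_graph(import_model(["matmul", "sigmoid"]))
for line in program:
    print(line)
```

The point of the intermediate layer is that each framework only needs its own importer, while the compiler back end is written once against the graph.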

Third, Project Brainwave uses a powerful DNN processing unit (DPU) synthesized onto commercially available FPGAs. Many companies, both large firms and startups, are building hardened DPUs. Although some of these chips have high peak performance, their operators and data types must be fixed at design time, which limits their flexibility. Project Brainwave takes a different approach, offering a design that scales across a range of data types.

This design combines the ASIC digital signal processing blocks on the FPGA with its synthesizable logic to provide a larger and better-optimized set of functional units. The approach exploits the FPGA's flexibility in two ways. First, we have defined highly customized, narrow-precision data types that increase performance without losing model accuracy. Second, we can incorporate research innovations into the hardware platform quickly (typically within a few weeks), which is essential in such a fast-moving field. As a result, we achieve performance comparable to, or better than, many hard-coded DPU chips, and we are delivering that performance today.
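To give a feel for what a narrow-precision data type trades away, here is a minimal sketch that rounds a value to a chosen number of mantissa bits. It is not Brainwave's format, which this article does not specify; the `quantize` and `dot` helpers and the sample numbers are assumptions made purely for illustration, and the exponent range is left unrestricted for simplicity.

```python
import math


def quantize(x, mantissa_bits):
    """Round x to the nearest value with only `mantissa_bits` mantissa bits.

    Illustrative only: a real narrow format would also limit the exponent range.
    """
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)              # x == m * 2**e with 0.5 <= |m| < 1
    scale = 1 << mantissa_bits
    return math.ldexp(round(m * scale) / scale, e)


def dot(a, b):
    """Plain dot product of two equal-length sequences."""
    return sum(p * q for p, q in zip(a, b))


# Made-up weights and activations for the comparison.
weights = [0.3, -1.7, 0.05, 2.4]
activations = [1.0, 0.5, -2.0, 0.25]

exact = dot(weights, activations)
narrow = dot(
    [quantize(w, 5) for w in weights],
    [quantize(a, 5) for a in activations],
)
print(f"full precision: {exact:.4f}   5-bit mantissa: {narrow:.4f}")
```

With this rounding scheme the relative error of each value is at most `2**-mantissa_bits`, which is why modest bit widths can often preserve model accuracy while needing far less hardware per multiplier.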

The DPU offers a single-threaded C programming model (no RTL); an ISA with specialized instructions for dense matrix multiplication, convolutions, non-linear activations, vector operations, and embeddings; a unique, parameterizable narrow-precision format wrapped in float16 interfaces; a parameterizable microarchitecture that scales to large FPGAs (~1M ALMs); full integration with hardware microservices (network-attached); a P2P protocol for communicating with CPU hosts and other FPGAs; and an ISA that is easy to extend with custom operators.

The design is optimized for matrix-vector multiplication at batch size 1. The matrix is distributed row-wise across 1K-10K banks of BRAM, providing up to 20 TB/s of bandwidth, and the design scales to use all available BRAM, DSP, and soft logic on the chip. Float16 weights and activations are converted in place to an internal format, and dense dot-product units are mapped efficiently onto soft logic and DSPs.
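The row-wise distribution can be illustrated with a small software model. In this sketch each "bank" is just a Python list holding some of the matrix rows, and the round-robin assignment is an assumption chosen for simplicity; the article does not say how rows are actually mapped to BRAM banks, and `split_rows`, `bank_matvec`, and `matvec` are hypothetical names.

```python
def split_rows(matrix, num_banks):
    """Assign rows to banks round-robin; each bank stores (row_index, row)."""
    banks = [[] for _ in range(num_banks)]
    for i, row in enumerate(matrix):
        banks[i % num_banks].append((i, row))
    return banks


def bank_matvec(bank, vector):
    """Compute the dot product of every row held by one bank with the vector."""
    return [(i, sum(w * x for w, x in zip(row, vector))) for i, row in bank]


def matvec(matrix, vector, num_banks):
    """Matrix-vector product assembled from per-bank partial results."""
    out = [0] * len(matrix)
    # In hardware the banks would work in parallel; here they run in sequence.
    for bank in split_rows(matrix, num_banks):
        for i, y in bank_matvec(bank, vector):
            out[i] = y
    return out


W = [[1, 2], [3, 4], [5, 6]]
x = [10, 1]
y = matvec(W, x, num_banks=2)
print(y)  # [12, 34, 56]
```

Because every bank reads only its own rows and the single input vector is broadcast to all of them, the available memory bandwidth grows with the number of banks, which is what makes batch-size-1 inference efficient in this kind of layout.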
